
BioRobotics Surgical Simulation


Visit the new home of the BioRobotics Surgical Simulation research group to learn about our most recent developments and projects in the realm of virtual surgery. We are currently working on advanced visual and haptic rendering techniques for building a virtual simulation environment as well as investigating the prospects of using pre-operative medical image data to generate patient-specific computer models of surgically relevant anatomy.


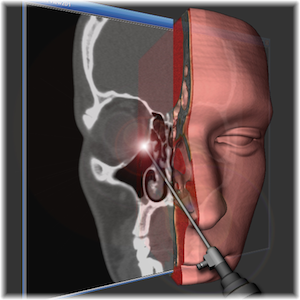

Visuohaptic Surgical Simulation


We are currently collaborating with faculty in the medical school to apply techniques in haptic and graphic rendering to surgical simulation. We hope to develop a training environment that can be used to teach both sensorimotor-level and task-level skills to surgical residents.

Our current focus is the simulation of temporal bone surgery, with a particular emphasis on modeling the behavior of a virtual drill and its contact with bone tissue. We are also developing a high-level scripting language that will allow instructors to provide a task-level description of a procedure for training and evaluation.

Our simulation environment is built on the CHAI 3D library for haptics and visualization.
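To make the drill-bone contact problem concrete, here is a minimal sketch of one common approach, assuming the bone is stored as a set of unit voxels and the drill burr is a sphere. Each haptic update sums a penalty force over the bone voxels that overlap the burr, pushing the tool away from each one in proportion to its penetration depth, and removes those voxels while the drill is running. The function `drill_step`, its parameters, and the voxel-unit scaling are hypothetical; this is not the CHAI 3D API or the group's actual model.

```python
import math
from typing import List, Set, Tuple

Voxel = Tuple[int, int, int]
Vec3 = Tuple[float, float, float]


def drill_step(bone: Set[Voxel], center: Vec3, radius: float,
               stiffness: float, drilling: bool) -> Tuple[Vec3, int]:
    """One haptic update of a spherical burr against a voxelized bone volume.

    Hypothetical sketch: returns the reaction force on the tool (voxel units)
    and the number of bone voxels overlapping the burr. If `drilling` is True,
    the overlapping voxels are removed from `bone` in place.
    """
    cx, cy, cz = center
    force = [0.0, 0.0, 0.0]
    touched: List[Voxel] = []
    # Only voxels inside the burr's bounding box can overlap it.
    for x in range(math.floor(cx - radius), math.ceil(cx + radius) + 1):
        for y in range(math.floor(cy - radius), math.ceil(cy + radius) + 1):
            for z in range(math.floor(cz - radius), math.ceil(cz + radius) + 1):
                if (x, y, z) not in bone:
                    continue
                dx, dy, dz = cx - x, cy - y, cz - z
                d = math.sqrt(dx * dx + dy * dy + dz * dz)
                if d >= radius:
                    continue
                touched.append((x, y, z))
                if d > 0.0:
                    # Penalty force: push the tool away from this voxel,
                    # scaled by how deep the voxel sits inside the burr.
                    scale = stiffness * (radius - d) / d
                    force[0] += scale * dx
                    force[1] += scale * dy
                    force[2] += scale * dz
    if drilling:
        bone.difference_update(touched)  # drilled voxels disappear
    return (force[0], force[1], force[2]), len(touched)
```

For a flat slab of bone below z = 0 and a unit-radius burr centered at (0, 0, 0.5), only the voxel at the origin overlaps the burr, so the force points straight up, away from the bone. Real simulators typically add filtering and force limits on top of a raw sum like this, since the force depends on voxel resolution.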


Standardized Evaluation of Haptic Rendering Systems


It is typically very difficult to convey a result in haptics in print. If I write a paper claiming that my new haptic rendering algorithm is great, it is nearly impossible for a reader to form a subjective impression of my results without trying my system in person. The haptics field would therefore benefit from standardized approaches to evaluating results. This project aims to provide a standard set of metrics to the haptics community.
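As an illustration of the kind of quantitative metric such a standard might include, the sketch below compares a rendered force trajectory against a reference force trajectory sampled at the same probe positions and reports the RMS and peak force error. It assumes a reference is available (for example, forces measured on a physical object or computed offline); the function name is hypothetical, and this is not the project's actual metric set.

```python
import math
from typing import Sequence, Tuple

Vec3 = Tuple[float, float, float]


def force_error_metrics(rendered: Sequence[Vec3],
                        reference: Sequence[Vec3]) -> Tuple[float, float]:
    """Compare rendered forces with reference forces along the same probe path.

    Hypothetical metric: returns (RMS error, peak error) in the units of the
    input forces, treating each sample's error as a 3-D vector difference.
    """
    if len(rendered) != len(reference) or not rendered:
        raise ValueError("need two non-empty force sequences of equal length")
    sq_sum = 0.0
    peak = 0.0
    for f, g in zip(rendered, reference):
        e = math.dist(f, g)  # Euclidean norm of the force error vector
        sq_sum += e * e
        peak = max(peak, e)
    return math.sqrt(sq_sum / len(rendered)), peak
```

Because the same probe path can be replayed through any rendering algorithm, numbers like these can be reported in print and compared across papers, which is the gap the paragraph above describes.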


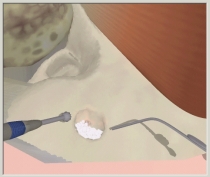

Event-Driven Surgical Simulation


Existing surgical simulators provide a physical simulation that can help a trainee develop the hand-eye coordination and motor skills necessary for specific tasks, such as cutting or suturing. However, it is equally important for a surgeon to gain experience in the cognitive processes involved in performing an entire procedure. The surgeon must be able to perform the correct tasks in the correct sequence, and must be able to quickly and appropriately respond to any unexpected events or mistakes.

We are developing a framework for a full-procedure surgical simulator that incorporates an ability to detect discrete events, and that uses these events to track the logical flow of the procedure as performed by the trainee. In addition, we are developing a scripting language that allows an experienced surgeon to precisely specify the logical flow of a procedure without the need for programming. The utility of the framework is illustrated through its application to a mastoidectomy.
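The event-tracking idea can be sketched as a small state machine, assuming the simulator emits discrete, named events and the script is simply an ordered list of expected events plus a set of events that always count as mistakes. The class `ProcedureTracker` and all event names below are hypothetical placeholders, not the framework's actual scripting language or a real mastoidectomy script.

```python
from dataclasses import dataclass, field
from typing import List, Set


@dataclass
class ProcedureTracker:
    """Track a trainee's progress through a scripted sequence of events (sketch)."""
    expected: List[str]                             # scripted order of task-level events
    errors: Set[str] = field(default_factory=set)   # events that always count as mistakes
    step: int = 0                                   # index of the next expected event
    log: List[str] = field(default_factory=list)

    def on_event(self, event: str) -> str:
        if event in self.errors:
            verdict = "error"
        elif self.step < len(self.expected) and event == self.expected[self.step]:
            self.step += 1                          # correct task, correct order
            verdict = "ok"
        elif event in self.expected[self.step + 1:]:
            verdict = "out_of_order"                # a later step performed too early
        else:
            verdict = "ignored"                     # repeated or unscripted event
        self.log.append(f"{event}: {verdict}")
        return verdict

    @property
    def complete(self) -> bool:
        return self.step == len(self.expected)


if __name__ == "__main__":
    # Hypothetical event names emitted by the simulator's event detectors.
    tracker = ProcedureTracker(
        expected=["drill_on", "cortex_removed", "landmark_exposed"],
        errors={"critical_structure_contact"},
    )
    for ev in ["drill_on", "landmark_exposed", "cortex_removed",
               "critical_structure_contact", "landmark_exposed"]:
        print(ev, "->", tracker.on_event(ev))
    print("complete:", tracker.complete)
```

Keeping the script as data (lists and sets of event names) rather than code is one way an experienced surgeon could specify a procedure without programming.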


Passivity Analysis of Haptic Devices


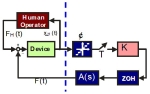

Instability is a common problem in haptic rendering and one of the primary factors limiting the immersiveness of visuohaptic simulations. The stability of haptic devices has been studied by various groups (Colgate, Hannaford, Gillespie, ...), but past analysis has not focused on the effects of quantization, Coulomb friction, and amplifier dynamics. Our work extends those results to account for these effects.
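For context, the classic starting point for this kind of analysis is Colgate and Schenkel's passivity condition for a sampled virtual wall: with wall stiffness K, virtual damping B, servo sample period T, and physical device damping b, the wall is passive when b > KT/2 + |B|. The sketch below simply evaluates that inequality; the function names are hypothetical, and it deliberately omits the quantization, Coulomb friction, and amplifier effects that the work described above adds.

```python
def max_passive_stiffness(b: float, B: float, T: float) -> float:
    """Largest K satisfying b > K*T/2 + |B| (Colgate-Schenkel condition).

    b: physical viscous damping of the device [N*s/m]
    B: virtual damping of the wall [N*s/m]
    T: servo sample period [s]
    Returns 0.0 when |B| already uses up all of the physical damping.
    The bound itself is excluded because the condition is a strict inequality.
    """
    if T <= 0.0:
        raise ValueError("sample period must be positive")
    return max(0.0, 2.0 * (b - abs(B)) / T)


def is_passive(K: float, B: float, b: float, T: float) -> bool:
    """Check the strict inequality b > K*T/2 + |B| for one wall setting."""
    return b > K * T / 2.0 + abs(B)
```

Reading the bound directly: the admissible stiffness grows with physical damping b and shrinks as the sample period T grows, which is why faster servo rates permit stiffer walls, and any virtual damping |B| eats into the same budget.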


Haptic Asymmetry


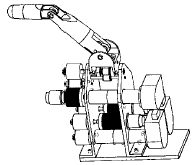

Haptic interfaces enable us to interact with virtual objects by sensing our actions and communicating them to a virtual environment. A haptic interface with force feedback capability also provides sensory information back to the user, thus communicating the consequences of his/her actions.

Ideally, haptic devices would employ an equal number of sensors and actuators, fully mapping actions and reactions between the user and the virtual environment. As the number of degrees of freedom of haptic devices increases, however, devices may well feature more sensors than actuators, since sensors are usually smaller, lighter, and cheaper than actuators.

What are the effects of using this type of haptic device, which we refer to as "asymmetric"? Our past research showed that while asymmetric devices can enable richer exploratory interactions, the lack of equal dimensionality in force feedback can lead to interactions that are energetically non-conservative. In our present work, we are investigating how to create haptic rendering software that limits such non-conservative effects, and we are testing how users perceive these effects.
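A toy example shows how missing actuation can make an interaction non-conservative. Assume a hypothetical 2-DOF virtual spring F = -Kp whose stiffness matrix K = [[k1, c], [c, k2]] couples the x and y axes. With full force feedback the field is conservative, so the net work around any closed hand motion is zero. If the device senses both axes but can only actuate x, the displayed field has curl c, and by Green's theorem the net work around a counter-clockwise loop equals c times the enclosed area: the device injects energy on every cycle. The functions below are illustrative only and are not the group's rendering model.

```python
from typing import Tuple


def rendered_force(x: float, y: float, k1: float, k2: float, c: float,
                   actuate_y: bool) -> Tuple[float, float]:
    """Force of the coupled spring F = -K p, K = [[k1, c], [c, k2]].

    If actuate_y is False, the y position is still sensed (it enters fx)
    but the y force cannot be displayed, as on an asymmetric device.
    """
    fx = -(k1 * x + c * y)
    fy = -(c * x + k2 * y)
    return (fx, fy) if actuate_y else (fx, 0.0)


def loop_work(k1: float, k2: float, c: float, width: float, height: float,
              actuate_y: bool, n: int = 100) -> float:
    """Net work done by the displayed force on the hand around a closed,
    counter-clockwise rectangle starting at the origin (midpoint rule)."""
    corners = [(0.0, 0.0), (width, 0.0), (width, height), (0.0, height), (0.0, 0.0)]
    work = 0.0
    for (x0, y0), (x1, y1) in zip(corners, corners[1:]):
        dx, dy = (x1 - x0) / n, (y1 - y0) / n
        for i in range(n):
            xm, ym = x0 + (i + 0.5) * dx, y0 + (i + 0.5) * dy
            fx, fy = rendered_force(xm, ym, k1, k2, c, actuate_y)
            work += fx * dx + fy * dy
    return work
```

Running `loop_work` with `actuate_y=True` gives zero net work (up to rounding), while `actuate_y=False` gives c times the loop area; traversing the loop clockwise flips the sign, so the same device can either inject or absorb energy depending on the user's motion.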


Mechanisms for Human-Friendly Robotics

Safety currently represents a major barrier preventing the incorporation of robotics into the typical home/work environment. This project aims to develop hardware and software that will allow large robots to safely share an environment with humans, and to safely interact with humans. The immediate focus is on the design and control of mechanisms that prevent unsafe and potentially harmful forces from being generated by a robotic arm, while still allowing the arm to perform useful tasks.

Multi-Finger Manipulation

This project aims to develop algorithms to allow complex, multi-fingered hands to perform grasping and manipulation tasks. An additional product of this work is the Robot Servo Control System, a RealTime-Linux-based environment for developing real-time robotic control algorithms.
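A basic building block in many grasping algorithms is checking that each fingertip force lies inside the Coulomb friction cone at its contact: the force must push into the object (normal component f_n > 0), and its tangential component must satisfy |f_t| <= mu * f_n, where mu is the friction coefficient. The sketch below performs that check for a single contact and for a set of contacts; the function names are hypothetical and this is not the project's grasp planner. A full grasp analysis would also require the contact forces to balance the load on the object.

```python
import math
from typing import Sequence, Tuple

Vec3 = Tuple[float, float, float]


def in_friction_cone(force: Vec3, normal: Vec3, mu: float) -> bool:
    """True if a fingertip force lies inside the Coulomb friction cone.

    `normal` points from the fingertip into the object and need not be
    unit length; `mu` is the Coulomb friction coefficient at the contact.
    """
    nlen = math.sqrt(sum(c * c for c in normal))
    if nlen == 0.0:
        raise ValueError("contact normal must be non-zero")
    n = [c / nlen for c in normal]
    fn = sum(f * c for f, c in zip(force, n))  # normal (pushing) component
    if fn <= 0.0:
        return False  # a fingertip can push on the object but not pull
    tangential = [f - fn * c for f, c in zip(force, n)]
    ft = math.sqrt(sum(c * c for c in tangential))
    return ft <= mu * fn


def all_contacts_in_cones(forces: Sequence[Vec3], normals: Sequence[Vec3],
                          mu: float) -> bool:
    """Check every finger contact of a candidate grasp (one force per normal)."""
    if len(forces) != len(normals):
        raise ValueError("need one normal per contact force")
    return all(in_friction_cone(f, n, mu) for f, n in zip(forces, normals))
```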